Build a use case for AI to drive smart automation in B2C Consumer Products Manufacturing

In the first post of this 2-part series, I emphasized the need to write a use case to determine whether AI could be applied to your B2B business processes. I also explained that smart automation can eliminate redundant, manual B2B tasks and dramatically reduce errors.

After reading many articles, attending many webinars and following many conversations, I’ve found that there is minimal information on “HOW” AI can be used with shoppers. Today, I would like to explain “HOW” AI can be utilized in the B2C route to market.

Jumping hurdles

Everything is connected, and the Internet of Things (IoT) has produced some pretty savvy shoppers who are driven by influencers rather than by the traditional merchandise conditions found in stores. In-store temporary price reductions, features and displays of new and existing products are no longer enough to persuade shoppers to buy. Today’s shoppers are influenced by multiple media sources and countless ads with links to ecommerce sites, where they browse and put items in a virtual cart, only to abandon it. Beyond incenting the savvy shopper, there is also a lot to understand about new products coming to market.

New product launches can be a huge risk for consumer products (CP) manufacturers like you, both in cost and in the possibility of failure. Where will shoppers purchase the product: online or in a physical store? To answer questions like this, data needs to be captured, cleansed, stored and analyzed. This leads us to the next big hurdle.

The term “big data” has been in use since the 1990s, when the dot-com boom in Silicon Valley popularized it. Big data usually refers to data sets that are far larger than common software tools are built to capture, cleanse, manage and process within a reasonable timeframe. It spans unstructured, semi-structured and structured data, although its primary focus is unstructured data. The size of big data is a constantly moving target, ranging from a few dozen terabytes to many zettabytes. Forms of big data include retailer loyalty data, retailer point of sale data, syndicated data and panel data, and the volume continues to grow.

In addition to big data on the shopper, you have a vast number of internal and external data sources to manage. For example, internal supply chain data can be siloed in different software applications, making it difficult to mine, and multiple data providers track and provide sell-in and sell-out volume for wholesalers and indirect retailers. The list of data points is almost endless, and each source requires an integration, followed by cleansing and, finally, centralizing all the data in a data lake. Once you have centralized all the data, what’s next?
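To illustrate the integrate, cleanse and centralize steps, here is a minimal Python sketch. It assumes two hypothetical sources, a distributor sell-in feed and a retail point of sale sell-out feed, with made-up field names, and it uses an in-memory sorted list as a stand-in for a data lake table. A real pipeline would use your own integration and storage tools.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SalesRecord:
    """Common schema every source is mapped onto (illustrative fields)."""
    source: str
    sku: str
    week: date
    units: int


def cleanse(raw_rows, source, sku_key, week_key, units_key):
    """Map one source's field names onto SalesRecord and drop bad rows."""
    records = set()
    for row in raw_rows:
        try:
            sku = str(row[sku_key]).strip().upper()  # normalize SKU codes
            week = date.fromisoformat(str(row[week_key]))
            units = int(row[units_key])
        except (KeyError, TypeError, ValueError):
            continue  # skip rows with missing or malformed fields
        if sku and units >= 0:
            records.add(SalesRecord(source, sku, week, units))  # a set drops exact duplicates
    return records


def centralize(*batches):
    """Combine cleansed batches into one sorted table (stand-in for a data lake)."""
    combined = set().union(*batches)
    return sorted(combined, key=lambda r: (r.sku, r.week, r.source))


# Hypothetical sell-in (distributor) and sell-out (retail POS) extracts.
sell_in = [
    {"material": " sku-1 ", "period": "2024-01-01", "qty": "120"},
    {"material": "SKU-1", "period": "2024-01-01", "qty": 120},  # duplicate after cleansing
    {"material": "SKU-2", "period": "not-a-date", "qty": 5},   # malformed date, dropped
]
sell_out = [
    {"item": "SKU-1", "week_start": "2024-01-01", "units_sold": 95},
    {"item": "SKU-3", "week_start": "2024-01-08"},              # missing units, dropped
]

table = centralize(
    cleanse(sell_in, "distributor", "material", "period", "qty"),
    cleanse(sell_out, "retail_pos", "item", "week_start", "units_sold"),
)
for record in table:
    print(record)
```

Each source gets its own field mapping, so adding a new data provider means adding one more `cleanse` call rather than changing the shared schema.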

Data science is working behind the scenes in today’s Trade Promotion Optimization (TPO) capabilities. Data scientists create and train algorithms that model historical data points and predict the volume purchased under 5 individual or overlapping merchandise conditions. A TPO application takes the predicted volume, calculates the outcome of each promotion scenario and lets the user identify the best promotion to run, optimizing trade spend by spending less and delivering more profit. However, when you add our savvy shopper, who is looking for something beyond the traditional merchandise conditions, to the equation, these promotions no longer work.
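To make the TPO idea concrete, here is a minimal Python sketch, not any vendor’s model. It assumes a simple multiplicative lift model with three illustrative merchandise conditions (temporary price reduction, feature and display) and hypothetical lift and cost values standing in for what a trained model would estimate from historical data. It scores every individual and overlapping combination of conditions, including running no promotion, and returns the most profitable one.

```python
from itertools import combinations

# Hypothetical values standing in for what a trained TPO model would estimate
# from historical data. Real models use more conditions and real coefficients.
LIFT = {"tpr": 1.30, "feature": 1.15, "display": 1.20}          # volume multipliers
EVENT_COST = {"tpr": 0.0, "feature": 400.0, "display": 600.0}  # fixed trade spend per condition
TPR_DISCOUNT = 0.10  # temporary price reduction of 10%


def predict_volume(baseline, conditions):
    """Multiplicative lift model: each active condition scales baseline volume."""
    volume = baseline
    for condition in conditions:
        volume *= LIFT[condition]
    return volume


def promo_profit(baseline, price, unit_cost, conditions):
    """Profit of one scenario: predicted volume times unit margin, minus trade spend."""
    discount = TPR_DISCOUNT if "tpr" in conditions else 0.0
    margin = price * (1 - discount) - unit_cost
    spend = sum(EVENT_COST[c] for c in conditions)
    return predict_volume(baseline, conditions) * margin - spend


def best_promotion(baseline, price, unit_cost):
    """Score every individual and overlapping combination, including no promotion."""
    scenarios = [
        frozenset(combo)
        for size in range(len(LIFT) + 1)
        for combo in combinations(sorted(LIFT), size)
    ]
    return max(scenarios, key=lambda s: promo_profit(baseline, price, unit_cost, s))


print(sorted(best_promotion(5000, 5.0, 3.0)))
```

Under these made-up numbers, a baseline of 5,000 units at a $5.00 shelf price and $3.00 unit cost favors feature plus display, while at a baseline of 1,000 units the fixed trade spend outweighs the lift and running no promotion scores best, which is the “spend less, deliver more profit” trade-off in miniature.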

Jumping ahead

Both CP manufacturers and retailers need a consumer-focused plan that incorporates the consumer big data points gathered, identified, stored, cleansed and centralized in a data lake. This way, the data science algorithm no longer models just 5 data points to predict incremental volume; instead, it relies on combinations of merchandise conditions plus promotion frequency and depth. The model may contain as many as 25 data points to identify consumer trends, creating the next generation of shopper-specific promotions.

Incorporating all of these data points provides a predictive capability, and this is where AI comes into play: data scientists must now create or adapt a Large Language Model (LLM). An LLM is an AI neural network trained on large volumes of data, such as the captured inputs and outputs. Because it learns through self-supervised or semi-supervised methods, it can interpret text that is unlabeled or uncategorized by running it through the model. Information and content are ingested by the LLM, and its output feeds the algorithm that predicts the savvy shopper’s next move.

Hyperpersonalized shopping: You can dynamically improve customer experiences for the savvy shopper and drive revenue by helping shoppers find products that most closely match the attributes they’re looking for today, rather than basing recommendations only on past purchases.

- Create shopper-specific promotions that combine products through volume purchasing incentive discounts aligned with predicted or historical purchasing trends. Recommend new products based on purchasing trends and on product or shopper constraints, such as budget limitations or products that complement items the shopper already buys.
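A shopper-specific recommendation that respects a budget and favors complementary products could be sketched as follows. This is an illustrative Python example, not a production recommender: the `Product` fields, the Jaccard attribute-match score, the complement bonus and the tiered bundle discount are all assumptions chosen for clarity.

```python
from dataclasses import dataclass, field


@dataclass
class Product:
    sku: str
    price: float
    attributes: set = field(default_factory=set)
    complements: set = field(default_factory=set)  # SKUs this product pairs well with


def jaccard(a, b):
    """Share of attributes in common: len(a & b) / len(a | b)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(products, wanted, budget, cart=(), k=3, complement_bonus=0.25):
    """Rank in-budget products by attribute match, boosting complements of the cart."""
    cart = set(cart)
    scored = []
    for p in products:
        if p.price > budget or p.sku in cart:
            continue  # shopper constraint: stay within budget; skip items already chosen
        score = jaccard(p.attributes, wanted)
        if p.complements & cart:
            score += complement_bonus  # cross-product combination
        scored.append((score, p.sku))
    scored.sort(key=lambda t: (-t[0], t[1]))  # best score first, SKU breaks ties
    return [sku for _, sku in scored[:k]]


def bundle_discount(quantity, tiers=((6, 0.10), (3, 0.05))):
    """Volume purchasing incentive: highest tier the quantity qualifies for."""
    for min_qty, rate in tiers:  # tiers listed from largest minimum to smallest
        if quantity >= min_qty:
            return rate
    return 0.0


catalog = [
    Product("OAT-01", 4.50, {"organic", "gluten-free", "breakfast"}, {"MILK-02"}),
    Product("BAR-07", 2.00, {"organic", "snack"}),
    Product("GRAN-03", 9.00, {"organic", "gluten-free", "breakfast"}),
    Product("MILK-02", 3.00, {"dairy"}),
]
print(recommend(catalog, {"organic", "gluten-free"}, budget=5.00, cart=["MILK-02"]))
print(bundle_discount(4))
```

Scoring against the attributes the shopper wants today, rather than only against purchase history, is the point of the design; the budget filter and complement bonus are simple stand-ins for the constraints a richer model would learn.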

One objective is to identify when a shopper’s favorite products will be going on promotion and alert the shopper. AI can also streamline identifying and creating new products for ecommerce product catalogs, and it can help you write more robust product descriptions to improve your SEO rankings.
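Alerting shoppers about upcoming promotions on their favorites can be sketched in a few lines of Python. The example assumes, hypothetically, that a “favorite” is any SKU a shopper has bought at least three times and that promotions come from a simple calendar of (SKU, start date) pairs; all names and data are illustrative.

```python
from collections import Counter, defaultdict
from datetime import date


def favorites_from_history(purchases, min_count=3):
    """Hypothetical rule: a favorite is any SKU bought at least min_count times."""
    counts = Counter(purchases)  # keys are (shopper, sku) pairs
    favorites = defaultdict(set)
    for (shopper, sku), n in counts.items():
        if n >= min_count:
            favorites[shopper].add(sku)
    return dict(favorites)


def promo_alerts(favorites, promo_calendar, today, horizon_days=7):
    """Return (shopper, sku, start) for favorites whose promotion starts soon."""
    alerts = []
    for shopper, skus in favorites.items():
        for sku, start in promo_calendar:
            days_until = (start - today).days
            if sku in skus and 0 <= days_until <= horizon_days:
                alerts.append((shopper, sku, start))
    return sorted(alerts)


history = [("shopper-42", "OAT-01")] * 3 + [("shopper-42", "BAR-07"), ("shopper-7", "MILK-02")]
calendar = [("OAT-01", date(2024, 5, 6)), ("BAR-07", date(2024, 5, 3))]
favs = favorites_from_history(history)
print(promo_alerts(favs, calendar, today=date(2024, 5, 2)))
```

In practice the favorite rule would come from the predictive model rather than a fixed purchase count, and the alerts would feed an email, app or loyalty channel.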

The LLM can search competitor prices several times a day and analyze demand patterns, market trends or operational costs. It can also provide pricing recommendations in real time, so you can avoid losing shoppers to competitors or adjust prices to maximize profits. These examples merely scratch the surface of how you can satisfy today’s savvy shopper. What’s your next step, and how can you be successful?
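A real-time pricing recommendation can be sketched as a guarded optimization. This hypothetical Python example assumes a constant-elasticity demand curve (elasticity of -2.5 by default), a minimum margin over unit cost and a maximum premium over the cheapest competitor price. It evaluates candidate prices in 5-cent steps and returns the most profitable one within those guardrails; real demand models and price-collection feeds would replace these assumptions.

```python
def demand(price, ref_price, ref_volume, elasticity):
    """Constant-elasticity demand (an assumption): volume = ref * (price / ref_price) ** e."""
    return ref_volume * (price / ref_price) ** elasticity


def recommend_price(competitor_prices, unit_cost, ref_price, ref_volume,
                    elasticity=-2.5, min_margin=0.10, max_premium=0.05, step_cents=5):
    """Most profitable price on a cent grid, bounded by margin and competitor guardrails."""
    floor_c = round(unit_cost * (1 + min_margin) * 100)  # lowest allowed price, in cents
    ceiling_c = round(min(competitor_prices) * (1 + max_premium) * 100)  # highest allowed
    candidates = [c / 100 for c in range(floor_c, ceiling_c + 1, step_cents)]
    if not candidates:
        return floor_c / 100  # guardrails conflict: protect the margin floor
    return max(
        candidates,
        key=lambda p: (p - unit_cost) * demand(p, ref_price, ref_volume, elasticity),
    )


# Competitor prices collected during the day (hypothetical values).
print(recommend_price([5.49, 5.29, 5.79], unit_cost=3.00, ref_price=5.00, ref_volume=1000))
print(recommend_price([4.00], unit_cost=3.00, ref_price=5.00, ref_volume=1000))
```

With a $3.00 unit cost and competitor prices of $5.49, $5.29 and $5.79, the sketch lands on $5.00, the unconstrained optimum for this demand curve; if the cheapest competitor drops to $4.00, the 5% premium cap binds and it recommends $4.20. Working on an integer cent grid avoids floating-point drift when stepping through prices.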

Get a jump start

If what you’ve read so far sounds good, here’s where to begin your assessment of Advanced Analytics and Smart Automation.

AI and Advanced Analytics focus on these 3 critical areas:

- Developing the right talent base and operating model

- Ensuring effective data strategy and governance

- Building and rebuilding the right data and digital platforms

Within Advanced Analytics, AI and Smart Automation focus on these 3 areas:

- Collecting big data

- Managing big data

- Predicting consumer behavior

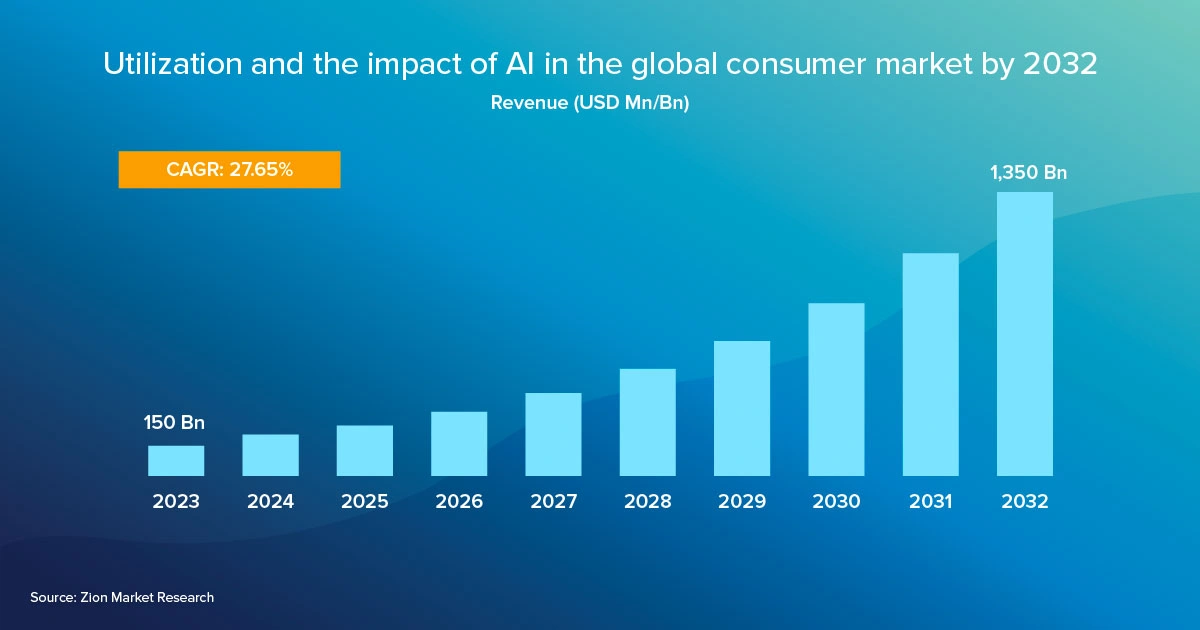

Now is the time to evaluate whether AI is right for your organization. Assess whether it will support your revenue growth management (RGM) journey, and be prepared for the day when it is time to incorporate it. In other words, do your homework now by creating a use case that sets you up for future success. Can you really afford not to?

Get the latest news, updates, and exclusive insights from Vistex delivered straight to your inbox. Don’t miss out—opt in now and be the first to know!